real estate technology

Searching for the “holy-grail” in PropTech: the “all-in-one” real estate vertical.

Sometimes referred to as an “operating system” for Real Estate, the idea is to build a single application that provides a software solution for every stage of the real estate sales process.

Creating the Real Estate vertical - a new approach

by Mark Blagden, AccountTECH

Existing Landscape

For over 20 years, the “holy-grail” in Real Estate software engineering has been the “all-in-one” real estate vertical. Sometimes referred to as an “operating system” for Real Estate, the idea is to build a single application that provides a software solution for every stage of the real estate sales process. The tools available to software designers have evolved to the point that this is finally possible - and maybe not even too hard. The challenge now is no longer technical. Now what is needed is finance and leadership. To create and grow a Real Estate vertical that is both flexible and adaptive, it will take technology investors that can provide funding to create the data structure that will make consolidations & integrations possible. And it will take leadership to help dev teams understand that the code they need to write for integration will increase the long-term viability of whatever program they have written.

There are hundreds of real estate software applications because there are dozens of distinct tasks that need automation in the Real Estate industry. In every application category there are development teams that are specialists in their field. They build the best-in-class software in their specialized fields. Examples are SkySlope, DotLoop or AppFiles in Transaction Management or AccountTECH or LoneWolf in accounting.

The best software programs do not attempt to be all things to all users, but instead are specialists in their specific niche. Generally, software programs that appear in the market and purport to do “everything” are not particularly deep in any of their features. Inevitably they provide features that are limited scope and usually are cobbled together with dependencies on outside programs like Quickbooks or ZipForms or Docusign. Best-in-class software developers stick to their knitting. So for companies like my company, AccountTECH in accounting or Testimonial Tree for gathering user recommendations or Move Easy for concierge services - we stick to writing code for what we know - as a result, we have the most in-depth, comprehensive and flexible software in our respective categories.

The way forward

API technology in Real Estate is currently the newest technology for passing information between disconnected applications. It allows us to pull data from MLS or other remote data-stores like SkySlope or eRelocation. But this technology could allow investors to buy multiple, diverse real estate software applications and easily accomplish three valuable outcomes. First, API technology can easily allow their acquisitions to be fully integrated into a real estate vertical. Secondarily, it would enable investors to continue reaping the ARR from the software programs as they exist from users who want to continue using the “old software we have always used”. Finally an API foundation can make it simple to add the essential functionality of the acquired software program into their evolving real estate vertical. This final possibility is how we built our latest software program called darwin.cloud - and we will explore this later in this article.

Benefits and downsides of current API implementations

Using API technology, our software developers can gather information from the MLS and add it to our accounting software database. In turn, we can take the data collected from the MLS API and push it (via API) into the databases of the major national franchises. Then, other software programs like AdWerx or Delta Media or MoveEasy can use our API to get data from AccountTECH software to help automate processes in their software.

For developers, the key benefit of API technology is that we don’t need to understand the complex database structure of another software company’s software. For instance, instead of our programmers needing to learn anything about some MLS regions software, the MLS will tell us that their MLS has an API feature (called an end point) named: GetListingData. So we just write one line of code that “calls” the end point: GetListingData and the MLS gives us all the data for whatever MLS ID# we request. That simplicity makes software-to-software data integration possible and fast. All modern real estate software (like AccountTECH) has API technology built in for both receiving and sending data.

What makes API technology integrations time-consuming, labor intensive and slow is the need to MAP the data from software to software - and the lack of any universal standardization in the API data available. Everytime a programmer makes an API call, they retrieve lots of data organized in columns and rows - and each column has a header that describes the data. For example: ListDate, MLSnumber, ListingPrice. The time-consuming issue is that in our software we have fields called: ListingDate, MLS_num, AskingPrice. This means someone on staff needs to create a “MAP” between the field names from the MLS and our field names. Additionally, there is always a data TYPE issue to consider. The MLS data may be “typed” like this:

ListDate - text value

MLSnumber - integer number

ListingPrice - text value

And for our accounting system we need:

ListDate - date value

MLSnumber - text value

ListingPrice - currency

So while it is great to be able to retrieve data so easily from the MLS, the data needs to be both mapped and converted to be useful. All software programs with API technology have this challenge and they have their own way of solving this problem. At AccountTECH, we decided long ago that this time-consuming work, while critical, was too rudimentary to assign to programmers. So we built what we call a “Universal API consumption engine”. This lets us take API data from any source - and have it mapped and converted by entry level staff that are not programmers.

A couple other problems with API technology are latency and data primacy. When software programs are talking to each other thru API, the data is only updated when one program makes an API call to another program. In between API calls, the data between the two systems is always a little out of date. The other issue is about primacy. So lets say AccountTECH has a selling price of $123,456 and DotLoop has a selling price of $234,567. Which one is correct ? This is a question that always needs to be answered when setting up an API integration.

So, in summary when using APIs to make software programs share data, the work always needs to follow these steps:

| Call EndPoint from some Data Source | > | Map incoming data to your data fields | > | Convert data to the type required by your database | > | Evaluate primacy and Insert/Update |

The opportunity for Investors creating a Real Estate vertical is to eliminate all these steps between all the diverse software applications that they acquire and aggregate. This will save countless hours of labor savings in data mapping and transformations. It will provide “always on real-time integrations” between all the software programs in the portfolio. It will ameliorate the need to force users to upgrade out of their legacy software into the new Unified Application vertical they create from their acquisitions.

Similarities in Real Estate data

While there are a variety of Real Estate software categories - each with their unique task focus, the underlying data behind all of these applications is virtually identical. Let’s examine the data needs of a variety of disparate software applications:

AccountTECH (accounting)

LionDesk (crm)

DotLoop (transaction management)

eRelocation (relocation management)

Testimonial Tree (referrals)

MoveEasy (concierge services)

All these different software programs have databases behind the application that capture the same data points. For example, the data points related to a Buyer or Seller. Each of these applications will have fields like first name, last name, email address, phone numbers, source of business. They each will use that data in different ways and for each of these applications the data will have varying significance - but it’s the same data.

For AccountTECH (accounting), the Buyer and Seller information may not be, strictly speaking, necessary - but it can be valuable if it can be captured. Even without Buyer or Seller information, transaction commission income and expense can be calculated, the accounting records can be created and posted to the company’s financials. The transaction can be reported to the associated franchise organization. Some brokerages have commission split agreements based on Lead Source - so these firms require Buyer / Seller Lead source for their accounting. Other brokerages simply want reporting of company dollar based on Lead source - so they also require this information from agents. For LionDesk (crm) a Buyer or Seller is a contact. This contact may have bought or sold a house with the brokerage - or maybe they are only a cold prospect. But they exist as a contact just the same. For Testimonial Tree, a Buyer or Seller is a possible source for a valuable testimonial. For move easy the Buyer is a potential customer for concierge services. Brokerages that own mortgage, escrow or title companies gather Buyer / Seller data for the same reason. For eRelocation, the people in its database are potential buyers or sellers. This software gathers and distributes relocation lead information. Then agents work to turn that relocation lead into a Buyer / Seller. eRelocation links the relocation lead to a Sales transaction. In all of these software programs, ultimately the Buyer / Seller data gets linked to Property data. Each of these programs need varying quantities of property data - but the takeaway is that there is virtually no Property or People related data points that are unique amongst all these various applications.

Architecture

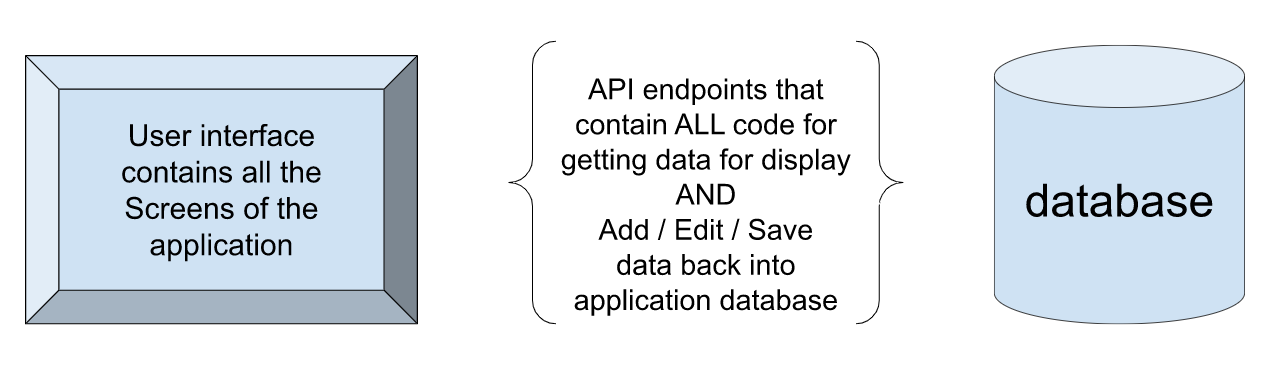

However, any Real Estate software is coded. And regardless of the programming language or the application delivery platform - ALL Real Estate software works like this. To start, the user does this:

| User goes to some screen in the software | > | User asks the software to show some property or person info OR the user fills in the screen with new data | > | Software displays the data |

Then the user does this

| User reviews the data on the screen | > | User edits the data as needed | > | User saves the data |

There is endless variation in HOW the software applications can be coded to accomplish a task. Over the past 30 years, the tools for programmers are better for their primary task: getting, displaying, adding, editing and saving data. The best Real Estate software applications have been developed (or replatformed) to use the newer technology: API for data & Web for display. These technologies maximize the simplicity and multi-use capacity of their applications. When building a Real Estate vertical, the goal should be to have one database serve multiple diverse applications. The key is to get your developers to use API technology internally. This means the different “pieces” of an application should be disconnected and should only “talk” to each other thru API. Here is the fundamental architecture:

This is not how most software from the 1990s (much of it still in use today in Real Estate ) was written. The first version of AccountTECH , Real Easy, Real Ledger - these were all written using MS Access and all 3 layers were together in a single database. Even older software from that era, like LoneWolf, Profit Power, UDS, Lucero all used (or still use) technology like FoxPro or other technologies that keep all 3 layers of an application intertwined in a single file. All these real estate software programs had (or have) at their core an architecture and technology that can’t evolve.

Re-platforming is the term used to describe a “re-building” of an application from the ground up. AccountTECH began re-platforming in 2014 in what we thought at the time would be a 14 month process. It turned out to be a 46 month process when you include the time it took to move our entire client base onto our new platform. In the process, we learned that there is very little in a legacy application that can be salvaged when re-architecting. The database schema and table relationships can be used as a guide to building in a modern relational database. The institutional knowledge of the team about customer requirements/requests and data integrity requirements can be translated into the new application - and that’s it.

The good news is that the hard work of replatforming with the correct architecture, while difficult, makes many things possible and easy. With the right architecture in place, it's easy to extend the features of an application. It's much easier to develop and deploy ongoing improvements. It makes consumption of data from other platforms and applications much easier to instantiate. And perhaps most importantly, it makes the display layer of the application limitlessly interchangeable. So if you want your application to be a desktop winforms application with web-hosted data. Or you want your application to be mobile. Or browser based. Or available as a service for other programmers to use… All of these options are now possible. And they can co-exist simultaneously. In fact, at AccountTECH we have multiple different applications using a variety of display devices and technologies - all built on our foundational architecture. None of this would be possible without the API based re-engineering of our underlying architecture.

In the next section of this article we will outline a roadmap for how to integrate and maximize the ARR from multiple acquisitions. But there is one more architectural problem to discuss that poses a challenge to Real Estate software developers - and that is the hard-coded, “know-it-all” design approach.

The limits of “know-it-all” application architecture

Software is designed to solve problems and automate tasks. When any application’s functionality is designed, someone is the “decider”. This software development project leader is the SME (subject matter expert). They will decide what the code should do and what parameters need to be provided to get the job done.

For instance, in code that calculates an agent net commission, the code may take the agent’s name, their commission plan and the gross commission as parameters - and from these 3 pieces of information it will calculate the agent’s net commission.

Similarly, in processing data from MLS or dotLoop or SkySlope, when data is received, some programming code needs to determine what the incoming data “means” and where it should be stored in your database.

The common practice is that the SME/project leaders tell the programmers how the code should work. Based on their knowledge and experience, they outline all the possible tasks that the new code should be able to handle - and they explain to the programming team exactly how to solve for each scenario. Programming then takes these instructions and builds code that exactly executes the instructions as given.

This approach of having an SME pre-define every possible scenario that the code should handle - I call this “know-it-all”. The problem with “know-it-all” architecture is that starting with the very first draft, the code architecture begins to ossify immediately. In real-life examples, how this happens is like this:

- programmers write code as instructed by SME

- SME tests code and finds problems

- programmers modify original code and adjust it to fix problem discovered by SME

- SME tests code and finds more problems

- programmers modify original code again

- Repeat, repeat, repeat

By the time the code is released to the public, the original code has been modified countless times. The programming team is intimately familiar with the nuances of the code. But more importantly, they are invested in what they have built. In this way, the code base becomes a rigid foundation with a very specific approach. All future enhancements have to find a way to be coded within this specific architecture.

There are many places in programming where there are clear right and wrong answers, in those cases the “know-it-all”, hard-coded option is appropriate. But in Real Estate software, there are two areas where this approach is particularly ill-suited: Integrations (particularly MLS) and Commission calculations.

The problems with this “know-it-all” programming approach are many, but the main issues are:

Code written in this way can only accomplish a very finite number of tasks. In the future, when more flexibility is needed in the software, it sometimes happens that customer requested enhancements cannot be programmed because there is no way to fit them into the original, pre-defined code structure.

This kind of code is often written into the UI ( display screens ) of a software program instead of residing in an API. This means that anytime a change is needed, the entire application needs to be re-compiled and re-deployed.

SME are almost never aware of how strongly their ideas are informed (and limited) by their own prior work experience. So, the solutions they design work for their brokerage model - but not for everyone.

Let's look at the case of Real Estate software that performs MLS integrations. The “know-it-all” approach to writing code requires programmers to “hard code” for every field in every MLS - and there are hundreds of MLS boards to integrate with. As any programmer who has worked with MLS knows, the MLS boards often change / add / delete fields in the data they provide (which causes the integration code to need to be re-written). Additionally, the “lookup” values provided by the MLS are also constantly changing. For instance, If the MLS adds some new listing statuses like “Contingent on Inspection” or “Taking backup offers”, the integration code needs to be re-written and re-deployed. At companies like AccountTECH, ListHub, etc., the programmers find that it takes multiple full-time programmers to constantly debug, alter and maintain MLS data feeds. Ultimately, at AccountTECH, we determined that we needed to create a solution to the MLS data feed maintenance challenges.

The solution we came up with was the opposite of “know-it-all”, hard-coded architecture. Now, instead of manually writing distinct code for each new MLS integration, we use a universal Feed Integration tool that can take data from any source and import it into our database. One key reason this tool is successful is because our designers started with the assumption that we don’t know all the changes the MLS will give us in the future - And we need to be prepared to handle whatever changes come our way. So instead of hard-coding a field-by-field map between MLS data and AccountTECH data, our tool lets users drag & drop fields in a UI to create the field map. Further, since you can never know what new values the MLS will provide, instead of hard-coding for each new value we receive, our tool lets the team “transform” incoming data so that the meaning of the MLS values can be understood and used by AccountTECH software. When developing this Feed Integration tool, we had rule for handling incoming data:

Nowhere in the code are you allowed to hard-code any rules with logic that looks like “when you see this value, always do this”.

The reason is simple. You can never know what data values the MLS is going to provide. So, as soon as you hard-code the logic, you can be sure that some MLS will use different values that mean the same thing. In this way, the logic in the API at AccountTECH is not rigid with finite, pre-defined logic. Instead, it dynamically determines how to process incoming data based on the latest configurations setup in the UI by non-programmers.

Using the “know-it-all”, hard-coded architecture for commission calculations is similarly inappropriate. There is no SME or programmer that will ever be able to write predefined rules for every possible commission schema that Broker/Owners and recruiters dream up.

In the last 5 years, approximately 15 newer “back-office” applications have emerged. While these new entries don’t have comprehensive accounting functionality, they are marketed as “complete” because they include commission calculation technology and MLS feed integration. Anecdotal stories about commission calculation limitations suggest that they were coded with “know-it-all” architecture. The problem, as stated above, is that an SME’s knowledge and experience is informed by their work experience. Let’s say an SME has always worked within the Sotheby’s real estate franchise, there is an inescapable tendency (which all of us have) to assume what we know will apply to everyone else. Actually, it is almost impossible to avoid the trap of programming for “what-you-know”. So a Project manager that has only worked for Sotheby’s will create a Commission calculator that only works for the kinds of commission plans they have seen at Sotheby’s.

When it comes to Commission calculations, AccountTECH took a similar approach to Feeds, by creating a Commission calculation engine. Since you can never know all the crazy commission plans a brokerage will offer, it is essential to not hard-code the commission rules. We found that instead, you need to create an API that do the following:

- handle an unlimited number of exceptions to the standard commission rules

- allow the brokerage to have an unlimited number of conditions that define when a commission rule applies

- allow the brokerage limitless customization of what is included/excluded from the “commission basis”. The value calculated for “commission basis” is the core of any CAP or Graduated plan

- support any combination of “off-the-top” deductions to be calculated before the commission split

MLS feeds and Commission calculation are two examples of how the logic in real estate software code can be written in a way that avoids the rigidity that limits their functional capacity.

Solved: Building the “All-in-One” Real Estate vertical

Since all Real Estate software programs use essentially the same data. The ubiquity of API technology makes it simple to create real-time integration between multiple real estate software acquisitions (owned by an investment group) by having them all read/write to the same database and use a shared library of API endpoints.

So let’s say an investment group has three software companies that they are merging: Accounting/Back Office with CRM with Transaction management. To start, they select whichever of the three programs that has the most developed underlying database and most comprehensive library of API endpoints.

Now lets say they decide to first integrate the CRM with the Accounting/Back Office - and build on the database of the Accounting/Back Office. It’s a pretty straightforward task to map each field in the CRM database to a corresponding field in the Accounting/Back Office database. Then, the developers will determine if there are any fields in the CRM database that don’t exist in the Accounting/Back Office database. Once determined, they can add the missing fields into the Accounting/Back Office database and port the data. The programmers have now created a Unified database. This is exactly the process we used in 2017 when we re-platformed AccountTECH and built darwin on a new API/SQL database architecture.

For the CRM software, nothing in the UI needs to change. And nothing in the CRM customer experience needs to change. The only changes that need to be made are invisible to the CRM customer. For programming, the only work is to change how the CRM gets data in and out of the now Unified database.

In the CRM software, the programmers will use the already existing API endpoints that the Accounting/Back Office programmers wrote to connect the Accounting/Back Office UI to the Unified database. Using this approach, the CRM programmers only need to change the lines of code in their application that GET data, SAVE data and DISPLAY data. They don’t need to write the API code for retrieving the data or saving the data - since this is already coded in the pre-existing API endpoints. The CRM programmers only need to learn how to call the API endpoints already written by the Accounting/Back Office programmers. And they can have fields added to the API endpoint if needed.

This approach to migrating the core data into one Unified database can then be extended for the Transaction management software (and every other real estate software acquisition). So, if an investment group then buys another real estate software program for marketing material, or for form-filling, or for sending out surveys/testimonials - all these program’s underlying data can be migrated into the Unified database. All the acquired software applications can all take advantage of the already existing (and evolving) library of API endpoints. This radically reduces the amount of time needed to integrate the applications. But more importantly, it completely changes the existing paradigm for “integrations”. In the current real estate software market, most software applications are owned & operated by distinct entities. When integrated, they use API technology to pull / push data and incorporate it into their distinct application database. While this API integration approach is a big improvement over the limitation of real estate software in the past, it still leaves software developers dealing with two issues. First, the API data transferred between separate software companies is never “real-time”. Second, there can always be conflicts between the data in the two systems. Let’s say the Transaction managements software says the price of a listing is X, but the Accounting / Back Office software has the price as Y. It’s always a challenge to determine which is correct.

By using a Unified database and a shared library of API endpoints, an unlimited number of applications can all have “real-time” data all the time. Whenever any integrated application writes to the Unified database, all the other connected applications will have that information immediately. This approach opens a huge range of opportunities for expansion and customer satisfaction.

Possibilities

When an investment group buys multiple applications, there is an innate motivation to attempt to put them all together in one unified UI. And that probably should be the ultimate goal when trying to build a real estate software vertical. But it isn’t essential to get all the benefits of an integration - and it may not even be the approach that generates the maximum ARR.

There is a well known reality that real estate agents want to continue using the software that they know. Hypothetically, let's say a broker attempts to force their agents to move from appFiles (which has huge agent loyalty) to another great alternative like dotLoop, they may find they have a revolt on their hands. So, I don’t think it is necessarily true that customers would immediately flock to a new UI that integrated multiple applications. Let's say an investment group purchased MoxiWorks, AccountTECH, eRelocation & Testimonial Tree. The employees and agents of the brokerage may choose to continue using the separate UI of each of these applications - because they are already trained. But it is definitely true that users would find it irresistible to purchase from a Suite of applications that shared data in real-time. Users could keep the UI that they know and are trained in - while enjoying real-time data in all the applications in their tech stack.

On the other hand, eventually the market will demand a real estate vertical. And eventually all the employees and agents in a brokerage will migrate to a unified UI that includes all the functionality that is now contained in disparate applications.

The ARR implications of integration are also worth considering. Lets say a brokerage has separate subscriptions to all four of these programs: MoxiWorks, AccountTECH, eRelocation & Testimonial Tree. It is worth considering how much they would be willing to pay for a single, unified UI that incorporates all the features and technologies of these applications. Perhaps the cumulative ARR from each of these applications separately would be greater than the sales on a single unified UI.

Investors need to consider the path to integration. There is a problem with the notion that an “all-in-one” solution can be built in its entirety and not released for sale until complete. Versions of this have been tried and failed by big franchises like Realogy & Berkshire Hathaway. Instead, the development process, from the start, needs to be incremental and iterative.

The experience we have developed in building darwin.web has been truly instructive for us. All the ideas we have about how to build a real estate vertical have emerged from this experience. Back in 2014 when we began re-platforming using API and SQL and newer technology, we decided to first build our UI using winForms. While we were hoping we could build the UI on the web, at that time there were some technical issues with delivering some features that an accounting software requires. But more importantly, we knew that if we built a disconnect middleware by creating a comprehensive API endpoint library, then subsequent versions of our application would be free to develop in any UI we chose. Fast forward to 2021 and now we have built darwin.cloud. This is an entirely browser based version of our winforms application. Approximately 80% of the winforms application is already replicated into darwin.cloud. The main discovery is that we could release pieces of darwin.cloud as they were completed without waiting for the entire application to be ported to the browser. So our winForms application is still delivered to clients AND the browser version of our application is also delivered to our clients. The browser version has many benefits including ease of login and speed. But most importantly, the complete application doesn’t need to be fully in the browser for the client to begin getting the benefit of the portions of the application that are already available in darwin.cloud. For AccountTECH we also get iterative feedback from early adopters. This approach of allowing multiple UI to write in real-time to a Unified database is what we are currently doing in the development of darwin.cloud - and it serves as a model for how to unify an unlimited number of applications.

So, applications can remain distinct while being fully integrated with real-time data sharing through a unified database. But eventually, stakeholders will want disparate applications to be pulled together in a unified UI. This middleware API approach with shared endpoints will make developing a comprehensive vertical fairly easy. Since the institutional knowledge and topic specific SME exist within each software application team, the separate teams can keep their existing UI up and running (and generating income) while simultaneously adding the UI screens to an evolving Real Estate vertical. By the time the programmers of any acquired software application are ready to help build the unified application, they already have many things figured out:

- they know the specific needs of their application

- they know the flow that needs to exist in the UI of the application

- they have learned how to use the shared API endpoint library to drive the application

This makes these programmers uniquely qualified to create new UI screens in the evolving real estate vertical. They can publish iterative versions of the screens they develop in the vertical without any interruption in the functionality of their existing applications. As they are developing, they can ask users to beta the new screens they create in the vertical. And as the features and functionality migrate to the vertical, users will have choice. If the brokerage employees and agents prefer to continue to use the UI that they know and love - there is no reason to require them to switch software. For other clients, especially new clients or new brokerages, they may be drawn to the Unified real estate vertical where multiple, well-developed functionalities exist in a consistent visual experience.

This API based, middleware, Unified database architecture has the potential to build a rich, deep real estate vertical. It will avoid the prevalent trend in real estate software of developers to build applications that are “a mile wide and an inch deep”. And it should maximize ARR by allowing customers to stay with whichever UI is most comfortable for them - while getting all the benefits of a complete enterprise application solution.

Mark Blagden is the founder of AccountTECH LLC. He brings over 30 years of experience to the Real Estate accounting industry. Starting in 1990, AccountTECH began as an outsourced accounting firm for real estate. Over the years, it has grown into a leading solution for brokerages requiring accurate detailed financial analysis, multi-company accounting & operation efficiency.

To learn more, reach out at mark@accounttech.com

Ready to evolve?

Request a demo or learn more about the power of darwin.